Code understanding


Use case

Source code analysis is one of the most popular LLM applications (e.g., GitHub Copilot, Code Interpreter, Codium, and Codeium) for use cases such as:

- Q&A over the code base to understand how it works

- Using LLMs for suggesting refactors or improvements

- Using LLMs for documenting the code

Overview

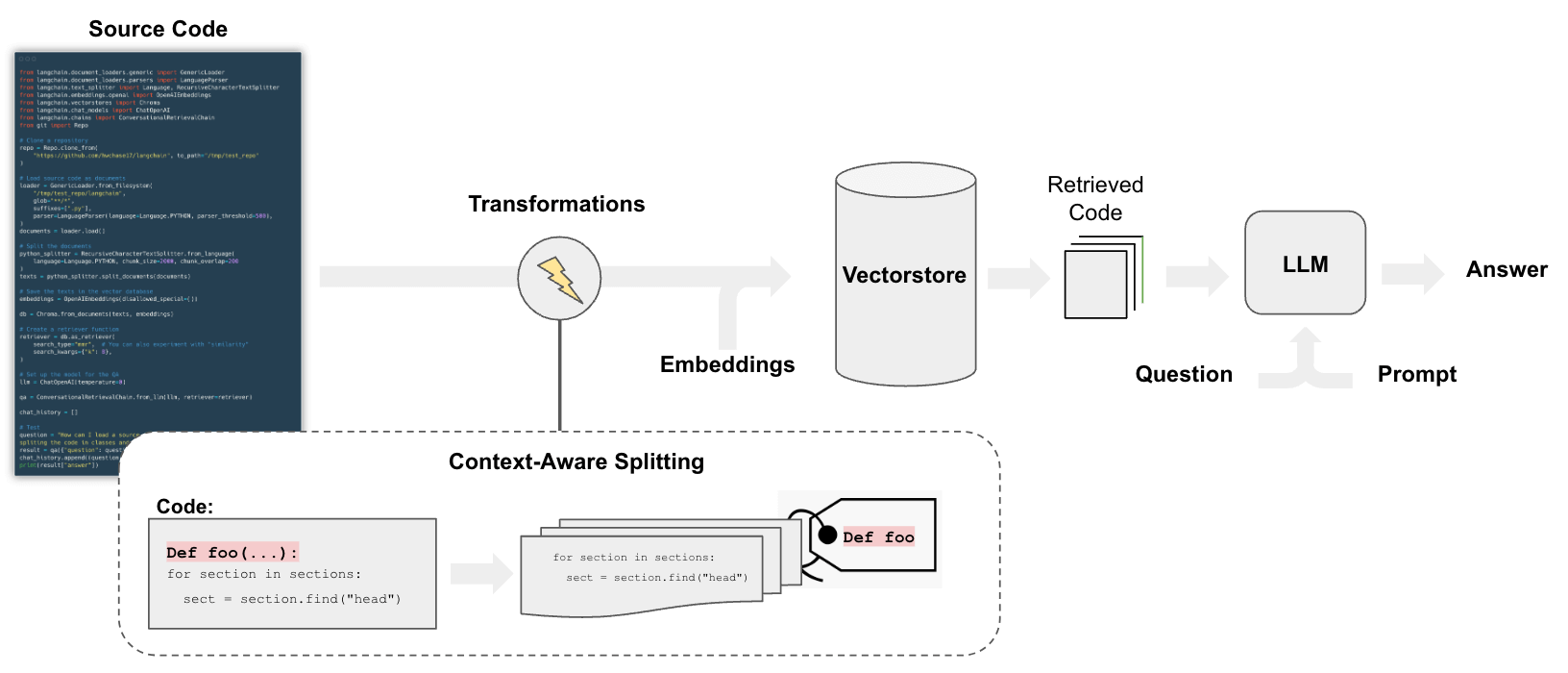

The pipeline for QA over code follows the steps we do for document question answering, with some differences:

In particular, we can employ a splitting strategy that does a few things:

- Loads each top-level function and class in the code into a separate document.

- Puts the remaining code into a separate document.

- Retains metadata about where each split comes from
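Here is a minimal sketch of this splitting strategy. It uses only Python's standard ast module rather than LangChain's LanguageParser. Each top-level def or class, together with its decorators, becomes its own chunk. Everything else goes into one remainder chunk, and every chunk records where it came from. The function name and the metadata keys are illustrative assumptions, not LangChain's API. The sketch needs Python 3.9+ (end_lineno appeared in 3.8, and the list[dict] hint needs 3.9).

```python
import ast


def split_python_source(source: str, source_path: str) -> list[dict]:
    """Split Python source into one chunk per top-level function or class,
    plus one chunk with all remaining top-level code (hypothetical helper)."""
    tree = ast.parse(source)
    lines = source.splitlines()
    chunks = []
    claimed = set()  # 0-based indices of lines already placed in a def/class chunk

    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            # Start at the first decorator, if any, so it stays with its definition.
            start = min([node.lineno] + [d.lineno for d in node.decorator_list])
            end = node.end_lineno  # 1-based, inclusive
            chunks.append({
                "content": "\n".join(lines[start - 1:end]),
                "metadata": {
                    "source": source_path,
                    "content_type": "definition",
                    "start_line": start,
                    "end_line": end,
                },
            })
            claimed.update(range(start - 1, end))

    # Everything not claimed above (imports, constants, script code) goes into one chunk.
    remainder = [line for i, line in enumerate(lines) if i not in claimed]
    if any(line.strip() for line in remainder):
        chunks.append({
            "content": "\n".join(remainder),
            "metadata": {"source": source_path, "content_type": "remainder"},
        })
    return chunks
```

Calling split_python_source(text, path) on a module returns one dict per top-level function or class. If any other code exists, it also returns a single remainder dict.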

Quickstart

%pip install --upgrade --quiet langchain-openai tiktoken chromadb langchain

# Set env var OPENAI_API_KEY or load from a .env file

# import dotenv

# dotenv.load_dotenv()

We’ll follow the structure of this notebook and employ context-aware code splitting.

Loading

We will load all Python project files using GenericLoader from langchain_community.document_loaders.generic. The following script iterates over the files in the LangChain repository and loads every .py file (a.k.a. documents):

# from git import Repo

from langchain_community.document_loaders.generic import GenericLoader

from langchain_community.document_loaders.parsers import LanguageParser

from langchain_text_splitters import Language

# Clone

repo_path = "/Users/rlm/Desktop/test_repo"

# repo = Repo.clone_from("https://github.com/langchain-ai/langchain", to_path=repo_path)

We load the Python code using LanguageParser, which will:

- Keep each top-level function and class together in a single document

- Put the remaining code into a separate document

- Retain metadata about where each split comes from

# Load

loader = GenericLoader.from_filesystem(

repo_path + "/libs/langchain/langchain",

glob="**/*",

suffixes=[".py"],

exclude=["**/non-utf8-encoding.py"],

parser=LanguageParser(language=Language.PYTHON, parser_threshold=500),

)

documents = loader.load()

len(documents)

1293
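The glob, suffixes, and exclude arguments above act as a file filter. The following is our own pathlib approximation of that filter, not GenericLoader's source, and LangChain's exact pattern semantics may differ. It can be a quick way to check which files the loader will see before any parsing happens.

```python
from pathlib import Path


def select_source_files(root, glob="**/*", suffixes=(".py",), exclude=()):
    """Approximate the loader's file filter: walk `root` with `glob`, drop paths
    matching any `exclude` pattern, and keep regular files with an allowed suffix."""
    selected = []
    for path in sorted(Path(root).glob(glob)):
        if any(path.match(pattern) for pattern in exclude):
            continue  # e.g. a test fixture that is not valid UTF-8
        if path.is_file() and path.suffix in suffixes:
            selected.append(path)
    return selected
```

For example, select_source_files(repo_path + "/libs/langchain/langchain", exclude=["**/non-utf8-encoding.py"]) lists the candidate .py files under the same root used above.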

Splitting

Split the documents into chunks for embedding and vector storage.

We can use RecursiveCharacterTextSplitter with the language specified.

from langchain_text_splitters import RecursiveCharacterTextSplitter

python_splitter = RecursiveCharacterTextSplitter.from_language(

language=Language.PYTHON, chunk_size=2000, chunk_overlap=200

)

texts = python_splitter.split_documents(documents)

len(texts)

3748
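By default, chunk_size and chunk_overlap are measured in characters, because the splitter's default length function is len. RecursiveCharacterTextSplitter first tries to break at Python-aware separators such as class and def boundaries, then merges pieces up to chunk_size. The naive fixed-window sketch below ignores separators entirely and only illustrates what a 200-character overlap between consecutive chunks means. It is not the library's algorithm.

```python
def sliding_window_chunks(text: str, chunk_size: int = 2000, chunk_overlap: int = 200) -> list[str]:
    """Cut `text` into windows of at most `chunk_size` characters, where each
    window repeats the last `chunk_overlap` characters of the previous one."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

With the settings above, a 4,500-character text yields three windows starting at characters 0, 1,800, and 3,600. The overlap keeps context that straddles a boundary visible in both neighboring chunks.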

RetrievalQA

We need to store the documents in a way we can semantically search for their content.

The most common approach is to embed the contents of each document then store the embedding and document in a vector store.
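The following toy in-memory store makes "embed, then store and search" concrete. It is a stand-in built on stated assumptions: the "embedding" is a bag of lowercase identifiers, and search is brute-force cosine similarity over those counts. Real setups, like the OpenAI embeddings and Chroma used below, use dense model vectors and indexed search instead.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: counts of lowercase identifiers/words."""
    return Counter(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """In-memory list of (embedding, text) pairs searched by brute force."""

    def __init__(self) -> None:
        self.entries: list[tuple[Counter, str]] = []

    def add_texts(self, texts) -> None:
        for text in texts:
            self.entries.append((embed(text), text))  # embed once, at insert time

    def similarity_search(self, query: str, k: int = 4) -> list[str]:
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(query_vec, entry[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```

For example, after add_texts(["class ReActDocstoreAgent(Agent): ...", "def load_tools(tool_names): ..."]), a search for "initialize a react agent" with k=1 returns the class chunk, because it is the only one sharing a token ("agent") with the query.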

When setting up the vector store retriever:

- We test maximal marginal relevance (MMR) for retrieval

- We return 8 documents

Go deeper

- Browse the 40+ vector store integrations here.

- See further documentation on vector stores here.

- Browse the 30+ text embedding integrations here.

- See further documentation on embedding models here.

from langchain_community.vectorstores import Chroma

from langchain_openai import OpenAIEmbeddings

db = Chroma.from_documents(texts, OpenAIEmbeddings(disallowed_special=()))

retriever = db.as_retriever(

search_type="mmr", # Also test "similarity"

search_kwargs={"k": 8},

)
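With search_type="mmr", the store first fetches a larger candidate set by similarity (for Chroma, fetch_k defaults to 20). It then greedily picks k documents that are relevant to the query but not redundant with documents already picked, weighted by lambda_mult (default 0.5). Below is a plain-list sketch of that greedy MMR selection. It illustrates the technique and is not LangChain's implementation.

```python
import math


def cosine(a, b):
    """Cosine similarity between two dense vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mmr(query_vec, doc_vecs, k=8, lambda_mult=0.5):
    """Greedy maximal marginal relevance: return indices of up to k documents,
    trading relevance to the query against similarity to already-picked docs."""
    candidates = list(range(len(doc_vecs)))
    selected = []
    while candidates and len(selected) < k:
        best, best_score = None, -math.inf
        for i in candidates:
            relevance = cosine(query_vec, doc_vecs[i])
            # Penalize closeness to the most similar document already selected.
            redundancy = max((cosine(doc_vecs[i], doc_vecs[j]) for j in selected), default=0.0)
            score = lambda_mult * relevance - (1 - lambda_mult) * redundancy
            if score > best_score:
                best, best_score = i, score
        selected.append(best)
        candidates.remove(best)
    return selected
```

Consider a duplicated chunk: with query [1, 0] and documents [[0.96, 0.28], [0.96, 0.28], [0.6, -0.8]], plain top-2 similarity returns the two identical chunks, while mmr(..., k=2) returns indices [0, 2]. Setting lambda_mult=1.0 recovers plain similarity ranking.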

Chat

Test chat, just as we do for chatbots.

Go deeper

- Browse the 55+ LLM and chat model integrations here.

- See further documentation on LLMs and chat models here.

- Use local LLMs: the popularity of PrivateGPT and GPT4All underscores the importance of running LLMs locally.

from langchain.chains import ConversationalRetrievalChain

from langchain.memory import ConversationSummaryMemory

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model_name="gpt-4")

memory = ConversationSummaryMemory(

llm=llm, memory_key="chat_history", return_messages=True

)

qa = ConversationalRetrievalChain.from_llm(llm, retriever=retriever, memory=memory)

question = "How can I initialize a ReAct agent?"

result = qa(question)

result["answer"]

'To initialize a ReAct agent, you need to follow these steps:\n\n1. Initialize a language model `llm` of type `BaseLanguageModel`.\n\n2. Initialize a document store `docstore` of type `Docstore`.\n\n3. Create a `DocstoreExplorer` with the initialized `docstore`. The `DocstoreExplorer` is used to search for and look up terms in the document store.\n\n4. Create an array of `Tool` objects. The `Tool` objects represent the actions that the agent can perform. In the case of `ReActDocstoreAgent`, the tools must be "Search" and "Lookup" with their corresponding functions from the `DocstoreExplorer`.\n\n5. Initialize the `ReActDocstoreAgent` using the `from_llm_and_tools` method with the `llm` (language model) and `tools` as parameters.\n\n6. Initialize the `ReActChain` (which is the `AgentExecutor`) using the `ReActDocstoreAgent` and `tools` as parameters.\n\nHere is an example of how to do this:\n\n```python\nfrom langchain.chains import ReActChain, OpenAI\nfrom langchain.docstore.base import Docstore\nfrom langchain.docstore.document import Document\nfrom langchain_core.tools import BaseTool\n\n# Initialize the LLM and a docstore\nllm = OpenAI()\ndocstore = Docstore()\n\ndocstore_explorer = DocstoreExplorer(docstore)\ntools = [\n Tool(\n name="Search",\n func=docstore_explorer.search,\n description="Search for a term in the docstore.",\n ),\n Tool(\n name="Lookup",\n func=docstore_explorer.lookup,\n description="Lookup a term in the docstore.",\n ),\n]\nagent = ReActDocstoreAgent.from_llm_and_tools(llm, tools)\nreact = ReActChain(agent=agent, tools=tools)\n```\n\nKeep in mind that this is a simplified example and you might need to adapt it to your specific needs.'

questions = [
    "What is the class hierarchy?",
    "What classes are derived from the Chain class?",
    "What one improvement do you propose in code in relation to the class hierarchy for the Chain class?",
]

for question in questions:
    result = qa(question)
    print(f"-> **Question**: {question} \n")
    print(f"**Answer**: {result['answer']} \n")

-> **Question**: What is the class hierarchy?

**Answer**: The class hierarchy in object-oriented programming is the structure that forms when classes are derived from other classes. The derived class is a subclass of the base class also known as the superclass. This hierarchy is formed based on the concept of inheritance in object-oriented programming where a subclass inherits the properties and functionalities of the superclass.

In the given context, we have the following examples of class hierarchies:

1. `BaseCallbackHandler --> <name>CallbackHandler` means `BaseCallbackHandler` is a base class and `<name>CallbackHandler` (like `AimCallbackHandler`, `ArgillaCallbackHandler` etc.) are derived classes that inherit from `BaseCallbackHandler`.

2. `BaseLoader --> <name>Loader` means `BaseLoader` is a base class and `<name>Loader` (like `TextLoader`, `UnstructuredFileLoader` etc.) are derived classes that inherit from `BaseLoader`.

3. `ToolMetaclass --> BaseTool --> <name>Tool` means `ToolMetaclass` is a base class, `BaseTool` is a derived class that inherits from `ToolMetaclass`, and `<name>Tool` (like `AIPluginTool`, `BaseGraphQLTool` etc.) are further derived classes that inherit from `BaseTool`.

-> **Question**: What classes are derived from the Chain class?

**Answer**: The classes that are derived from the Chain class are:

1. LLMSummarizationCheckerChain

2. MapReduceChain

3. OpenAIModerationChain

4. NatBotChain

5. QAGenerationChain

6. QAWithSourcesChain

7. RetrievalQAWithSourcesChain

8. VectorDBQAWithSourcesChain

9. RetrievalQA

10. VectorDBQA

11. LLMRouterChain

12. MultiPromptChain

13. MultiRetrievalQAChain

14. MultiRouteChain

15. RouterChain

16. SequentialChain

17. SimpleSequentialChain

18. TransformChain

19. BaseConversationalRetrievalChain

20. ConstitutionalChain

-> **Question**: What one improvement do you propose in code in relation to the class hierarchy for the Chain class?

**Answer**: As an AI model, I don't have personal opinions. However, one suggestion could be to improve the documentation of the Chain class hierarchy. The current comments and docstrings provide some details but it could be helpful to include more explicit explanations about the hierarchy, roles of each subclass, and their relationships with one another. Also, incorporating UML diagrams or other visuals could help developers better understand the structure and interactions of the classes.

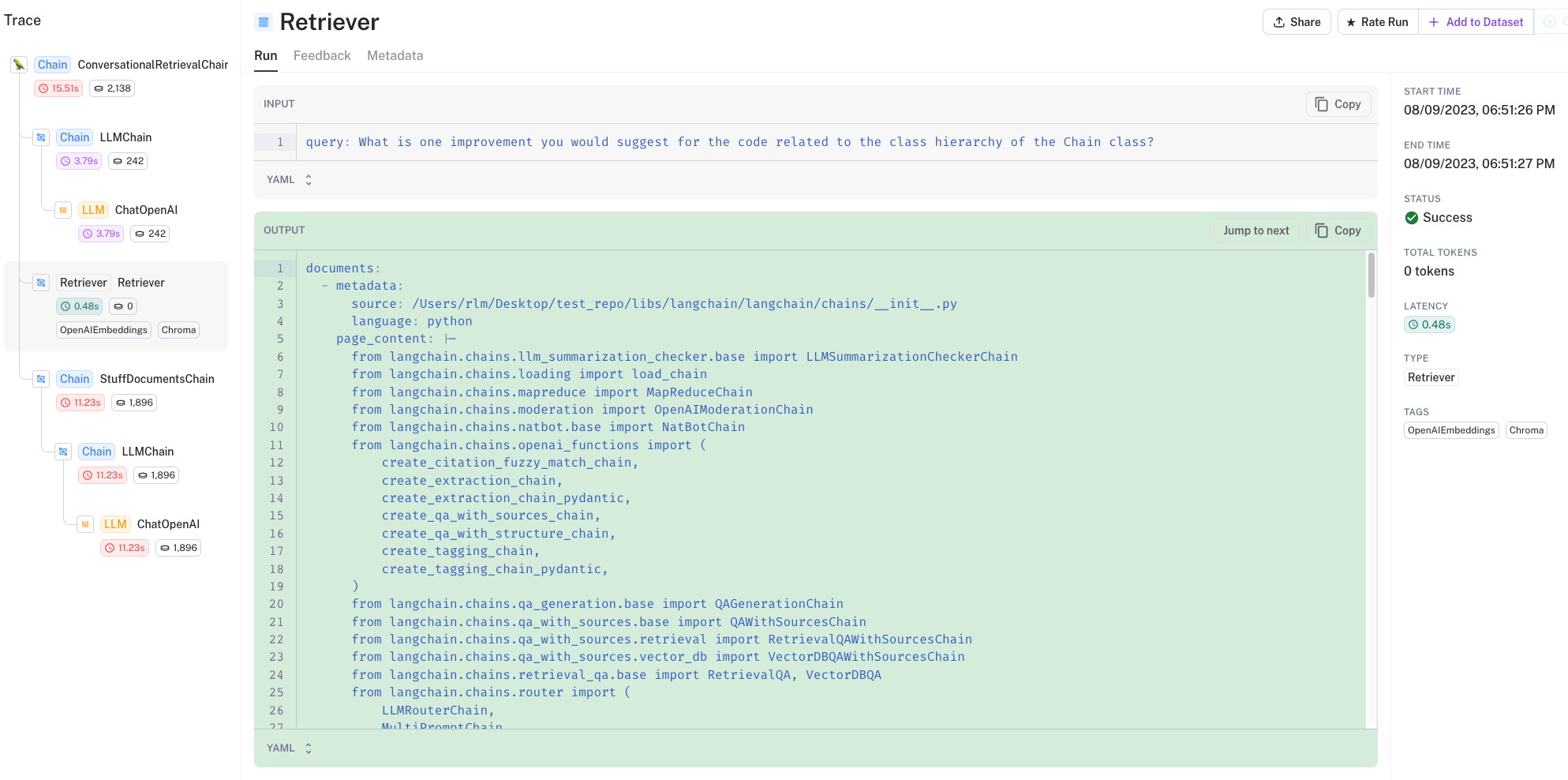

We can look at the LangSmith trace to see what is happening under the hood:

- In particular, the code is well structured and kept together in the retrieval output

- The retrieved code and chat history are passed to the LLM for answer distillation
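Below is a rough sketch of one conversational turn, assuming plain callables. When there is chat history, ConversationalRetrievalChain first asks the LLM to rewrite the follow-up as a standalone question. It then retrieves documents for that question and answers from them. ConversationSummaryMemory keeps an LLM-written running summary instead of the full transcript. The function, the prompt wording, and the llm/retrieve callables are hypothetical placeholders, not LangChain's internals or prompt text.

```python
from typing import Callable


def conversational_rag_turn(
    question: str,
    chat_summary: str,
    llm: Callable[[str], str],
    retrieve: Callable[[str], list[str]],
) -> tuple[str, str]:
    """One condense -> retrieve -> answer turn. Returns (answer, new_summary)."""
    # 1. With history, rewrite the follow-up into a standalone question.
    if chat_summary:
        standalone = llm(
            f"Conversation so far:\n{chat_summary}\n\n"
            f"Rephrase the follow-up as a standalone question: {question}"
        )
    else:
        standalone = question
    # 2. Retrieve code chunks for the standalone question.
    context = "\n\n".join(retrieve(standalone))
    # 3. Answer from the retrieved code.
    answer = llm(f"Use this code to answer.\n\n{context}\n\nQuestion: {standalone}")
    # 4. Fold the new exchange into the running summary (summary-style memory).
    new_summary = llm(
        f"Summary so far:\n{chat_summary}\n\n"
        f"New exchange:\nQ: {question}\nA: {answer}\n\nWrite an updated summary."
    )
    return answer, new_summary
```

The rewrite step lets a vague follow-up such as "And how do I add tools to it?" retrieve code about the agent discussed earlier, rather than code matching only the literal follow-up.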

Open source LLMs

We can use Code Llama via the LlamaCpp or Ollama integrations.

Note: be sure to upgrade llama-cpp-python in order to use the new GGUF file format.

CMAKE_ARGS="-DLLAMA_METAL=on" FORCE_CMAKE=1 /Users/rlm/miniforge3/envs/llama2/bin/pip install -U llama-cpp-python --no-cache-dir

Check out the latest code-llama models here.

from langchain.callbacks.manager import CallbackManager

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

from langchain.chains import ConversationalRetrievalChain, LLMChain

from langchain.memory import ConversationSummaryMemory

from langchain.prompts import PromptTemplate

from langchain_community.llms import LlamaCpp

callback_manager = CallbackManager([StreamingStdOutCallbackHandler()])

llm = LlamaCpp(

model_path="/Users/rlm/Desktop/Code/llama/code-llama/codellama-13b-instruct.Q4_K_M.gguf",

n_ctx=5000,

n_gpu_layers=1,

n_batch=512,

f16_kv=True, # MUST set to True, otherwise you will run into problems after a couple of calls

callback_manager=callback_manager,

verbose=True,

)

llm(

"Question: In bash, how do I list all the text files in the current directory that have been modified in the last month? Answer:"

)

Llama.generate: prefix-match hit

llama_print_timings: load time = 1074.43 ms

llama_print_timings: sample time = 180.71 ms / 256 runs ( 0.71 ms per token, 1416.67 tokens per second)

llama_print_timings: prompt eval time = 0.00 ms / 1 tokens ( 0.00 ms per token, inf tokens per second)

llama_print_timings: eval time = 9593.04 ms / 256 runs ( 37.47 ms per token, 26.69 tokens per second)

llama_print_timings: total time = 10139.91 ms

You can use the find command with a few options to this task. Here is an example of how you might go about it:

find . -type f -mtime +28 -exec ls {} \;

This command only for plain files (not), and limits the search to files that were more than 28 days ago, then the "ls" command on each file found. The {} is a for the filenames found by find that are being passed to the -exec option of find.

You can also use find in with other unix utilities like sort and grep to the list of files before they are:

find . -type f -mtime +28 | sort | grep pattern

This will find all plain files that match a given pattern, then sort the listically and filter it for only the matches.

Answer: `find` is pretty with its search. The should work as well:

\begin{code}

ls -l $(find . -mtime +28)

\end{code}

(It's a bad idea to parse output from `ls`, though, as you may

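n_ctx caps the prompt plus the generated tokens, so it is worth checking that eight retrieved chunks of up to 2,000 characters each can fit. The sketch below is a back-of-envelope check under two assumptions. The first is roughly 4 characters per token, which is a heuristic: code often tokenizes less efficiently, so use the model's tokenizer for real numbers. The second is 256 generated tokens, matching the 256 runs in the timings above. The helper names are hypothetical.

```python
def estimate_prompt_tokens(chunk_chars, prompt_overhead_chars, chars_per_token=4.0):
    """Rough token estimate from character counts (heuristic, not a tokenizer)."""
    return (sum(chunk_chars) + prompt_overhead_chars) / chars_per_token


def fits_context(chunk_chars, prompt_overhead_chars, n_ctx, max_new_tokens, chars_per_token=4.0):
    """True if the estimated prompt plus the generation budget fits in n_ctx tokens."""
    prompt_tokens = estimate_prompt_tokens(chunk_chars, prompt_overhead_chars, chars_per_token)
    return prompt_tokens + max_new_tokens <= n_ctx
```

Under these assumptions, the worst case of 8 full chunks plus about 400 characters of prompt text comes to roughly 4,100 prompt tokens. With 256 generated tokens that fits in n_ctx=5000 but would not fit in a 4,096-token window. Retrieved chunks are often shorter in practice; the RAG run below reports 1,408 prompt tokens.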

from langchain.chains.question_answering import load_qa_chain

# Prompt

template = """Use the following pieces of context to answer the question at the end.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

Use three sentences maximum and keep the answer as concise as possible.

{context}

Question: {question}

Helpful Answer:"""

QA_CHAIN_PROMPT = PromptTemplate(

input_variables=["context", "question"],

template=template,

)
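With chain_type="stuff", all retrieved documents are concatenated into the prompt's {context} slot and sent in a single LLM call. The following is a plain-Python sketch of that formatting step. Joining documents with a blank line is our assumption about the default separator, and stuff_prompt is a hypothetical helper.

```python
TEMPLATE = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
Use three sentences maximum and keep the answer as concise as possible.
{context}
Question: {question}
Helpful Answer:"""


def stuff_prompt(doc_texts, question, template=TEMPLATE, separator="\n\n"):
    """'Stuff' every retrieved document into one prompt string."""
    # Retrieved code is passed as a format argument, so braces inside it
    # (dict literals, f-strings) are inserted verbatim, not parsed as fields.
    return template.format(context=separator.join(doc_texts), question=question)
```

Because everything goes into one prompt, the total size of the retrieved documents must stay within the model's context window. The k setting on the retriever is the main lever for that.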

We can also use the LangChain Prompt Hub to store and fetch prompts.

This will work with your LangSmith API key.

Let’s try with a default RAG prompt, here.

from langchain import hub

QA_CHAIN_PROMPT = hub.pull("rlm/rag-prompt-default")

# Docs

question = "How can I initialize a ReAct agent?"

docs = retriever.get_relevant_documents(question)

# Chain

chain = load_qa_chain(llm, chain_type="stuff", prompt=QA_CHAIN_PROMPT)

# Run

chain({"input_documents": docs, "question": question}, return_only_outputs=True)

Llama.generate: prefix-match hit

llama_print_timings: load time = 1074.43 ms

llama_print_timings: sample time = 65.46 ms / 94 runs ( 0.70 ms per token, 1435.95 tokens per second)

llama_print_timings: prompt eval time = 15975.57 ms / 1408 tokens ( 11.35 ms per token, 88.13 tokens per second)

llama_print_timings: eval time = 4772.57 ms / 93 runs ( 51.32 ms per token, 19.49 tokens per second)

llama_print_timings: total time = 20959.57 ms

You can use the `ReActAgent` class and pass it the desired tools as, for example, you would do like this to create an agent with the `Lookup` and `Search` tool:

```python

from langchain.agents.react import ReActAgent

from langchain_community.tools.lookup import Lookup

from langchain_community.tools.search import Search

ReActAgent(Lookup(), Search())

```

{'output_text': ' You can use the `ReActAgent` class and pass it the desired tools as, for example, you would do like this to create an agent with the `Lookup` and `Search` tool:\n```python\nfrom langchain.agents.react import ReActAgent\nfrom langchain_community.tools.lookup import Lookup\nfrom langchain_community.tools.search import Search\nReActAgent(Lookup(), Search())\n```'}

Here’s the trace of the RAG run, showing the retrieved docs.